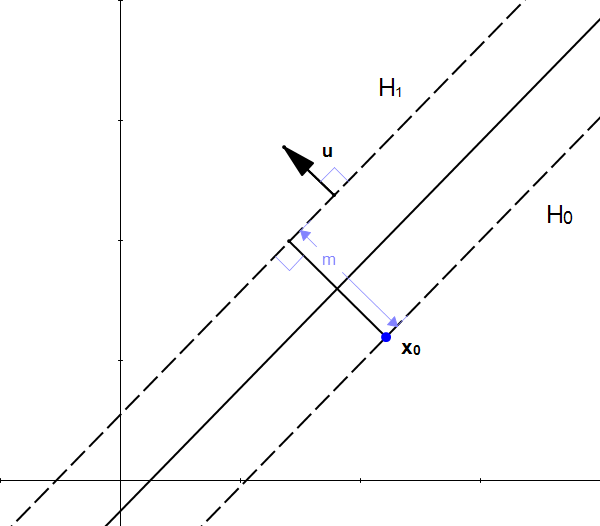

The space between the dotted vertical lines is the margin.

Support Vector Machine (SVM) is one of the most popular and powerful supervised machine learning algorithms, and it can be used for both classification and regression. In practice, however, it is most widely used for classification problems, on both linearly and non-linearly separable data. In this article, we'll focus on the classification setting only.

Given a set of points, SVM tries to estimate the optimal hyperplane, the one that best separates the classes of data. However, there can be an infinite number of separating hyperplanes, so how do we find the ideal one? From the diagram above, we can see that all three hyperplanes do an excellent job of separating the two classes. Intuitively, though, the green hyperplane separates them best, because its distance to the nearest element of each class is the largest. The other two hyperplanes also classify the two classes correctly, but they lie too close to the points. A hyperplane that is too close to the points may be too sensitive to noise: a new point may be misclassified even if it falls only slightly to the left or right of the hyperplane.

The explicit goal of an SVM is therefore to maximize the margin between the data points of one class and those of the other. SVM chooses the optimal hyperplane so that the separation between the classes is as wide as possible, which is why it is also referred to as a maximum margin classifier.
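
To make the margin idea concrete, here is a minimal Python sketch (standard library only) that scores a few hand-picked candidate hyperplanes by their margin, i.e. the distance from the hyperplane to the nearest point of either class, and keeps the widest one. It does not train an SVM: a real SVM solves an optimization problem to find the maximum-margin hyperplane instead of choosing from a fixed list. The toy data, the three candidates, and the helper names `signed_distance`, `margin`, and `best_hyperplane` are all hypothetical, chosen only for illustration.

```python
import math

# Hypothetical toy data: ((x1, x2), label) with labels -1 and +1.
POINTS = [
    ((1.0, 1.0), -1), ((2.0, 1.0), -1), ((1.0, 2.0), -1),
    ((4.0, 4.0), +1), ((5.0, 4.0), +1), ((4.0, 5.0), +1),
]

# Hypothetical candidate hyperplanes w . x + b = 0, stored as (w, b).
# All three separate the two classes, but at different distances from the points.
CANDIDATES = {
    "near_negatives": ((1.0, 1.0), -3.5),
    "vertical": ((1.0, 0.0), -3.0),
    "midway": ((1.0, 1.0), -5.5),
}


def signed_distance(w, b, x):
    """Signed Euclidean distance from point x to the hyperplane w . x + b = 0."""
    return (w[0] * x[0] + w[1] * x[1] + b) / math.hypot(w[0], w[1])


def margin(w, b, points):
    """Distance from the hyperplane to the nearest point, or -inf if any point
    lies on the wrong side of (or exactly on) the hyperplane."""
    nearest = min(y * signed_distance(w, b, x) for x, y in points)
    return nearest if nearest > 0 else float("-inf")


def best_hyperplane(candidates, points):
    """Return the name of the candidate whose nearest point is farthest away."""
    return max(candidates, key=lambda name: margin(*candidates[name], points))


if __name__ == "__main__":
    for name, (w, b) in CANDIDATES.items():
        print(f"{name:>15}: margin = {margin(w, b, POINTS):.3f}")
    print("widest margin:", best_hyperplane(CANDIDATES, POINTS))
```

All three candidates classify the toy data correctly, but `near_negatives` passes within about 0.35 units of the class -1 points, so a slightly shifted point could end up on the wrong side, while `midway` leaves about 1.77 units on both sides. In practice you would let a library find the hyperplane by optimization, for example scikit-learn's `SVC(kernel="linear")`, rather than enumerate candidates by hand.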
